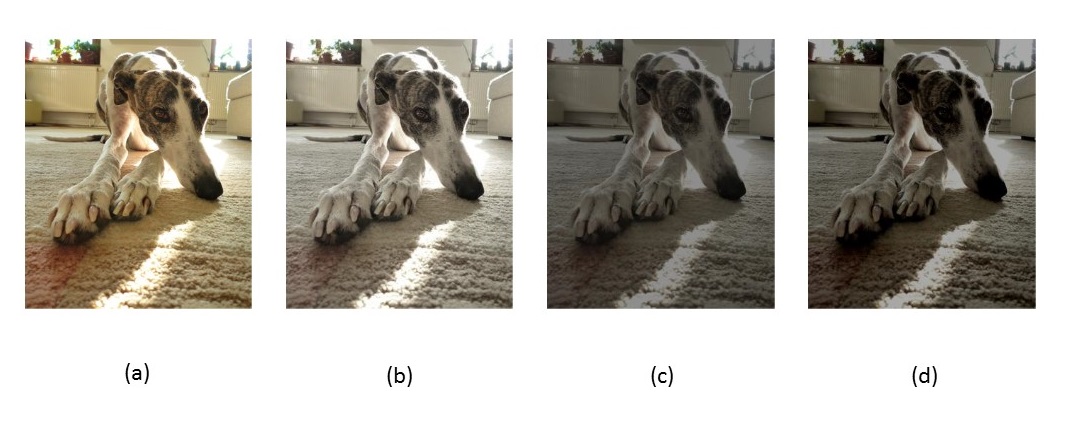

Example from the end-user domain: gradually changing a photo to achieve a desired effect.

To achieve a particular result, services can be composed either manually or automatically (with or without a learning component). In the case of manual composition, only the signature information of the services (i.e., their inputs and outputs) is considered, which ensures that the composed service can be executed. Whether a particular combination of image processing services is reasonable is left entirely to the user.
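As a minimal sketch of signature-only checking, assume that each service is described by exactly one input type and one output type. The `Service` class, the type names, and the service names below are hypothetical and not taken from the framework. A chain counts as executable if every service accepts the output type of its predecessor; the check says nothing about whether the chain produces a sensible result.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    """Hypothetical image processing service described only by its signature."""
    name: str
    input_type: str
    output_type: str


def is_executable(chain: list[Service], source_type: str) -> bool:
    """Return True if every service accepts the output type of its predecessor."""
    current = source_type
    for service in chain:
        if service.input_type != current:
            return False  # type mismatch: the chain cannot be executed
        current = service.output_type
    return True


# Hypothetical services, invented for illustration only.
to_gray = Service("to_grayscale", "RGBImage", "GrayImage")
blur = Service("gaussian_blur", "GrayImage", "GrayImage")
sepia = Service("sepia_tone", "RGBImage", "RGBImage")

print(is_executable([to_gray, blur], "RGBImage"))   # True
print(is_executable([to_gray, sepia], "RGBImage"))  # False: sepia_tone expects an RGB image
```

A chain that applies `sepia_tone` five times in a row would also pass this check, which is why judging whether a composition is useful remains the user's task in manual mode.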

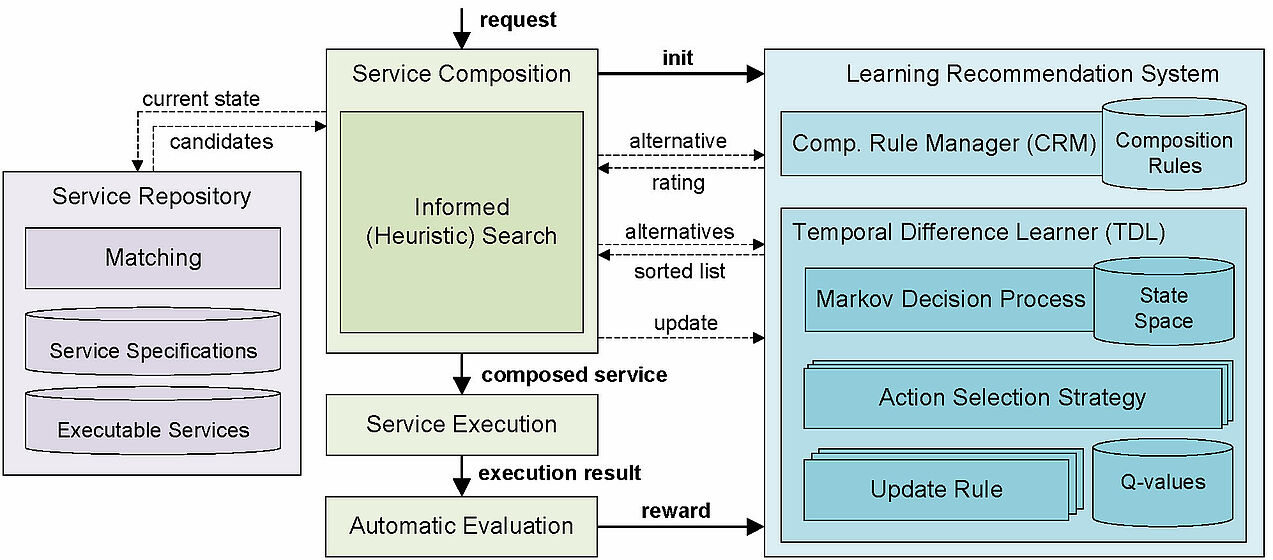

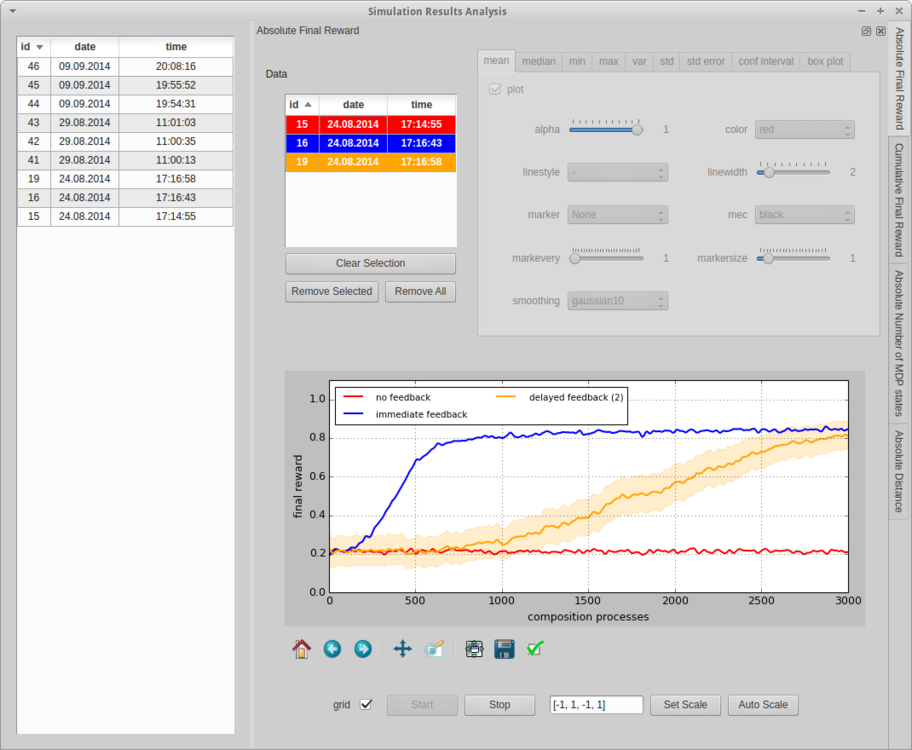

In the case of automatic composition without learning, our adaptive service composition framework (see below) considers not only signature information but also semantic information specified as pre- and postconditions. As a result, the composed solutions are more reasonable. When the framework's learning module is additionally enabled, the composition process improves with every further composition run, so that the best solutions can be identified among the set of reasonable solutions.
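The sketch below illustrates the two automatic modes under several assumptions that do not come from the framework itself: pre- and postconditions are modeled as sets of facts (STRIPS-style precondition, add, and delete sets), composition is a plain breadth-first search, and the learning module is replaced by a simple epsilon-greedy bandit over the set of reasonable solutions. The service names, facts, and the `hypothetical_feedback` rating are invented for illustration.

```python
import random
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    """Hypothetical service: signature plus semantic pre-/postconditions."""
    name: str
    input_type: str
    output_type: str
    pre: frozenset = frozenset()     # facts that must hold before the call
    add: frozenset = frozenset()     # facts established by the call
    delete: frozenset = frozenset()  # facts invalidated by the call


def compose(services, source_type, initial, goal, max_len=3):
    """Breadth-first search for every chain of at most max_len distinct services
    that is signature-compatible and whose postconditions reach the goal facts."""
    solutions = []
    queue = deque([((), source_type, frozenset(initial))])
    while queue:
        chain, current_type, facts = queue.popleft()
        if chain and goal <= facts:
            solutions.append(chain)  # goal reached: record, do not extend
            continue
        if len(chain) == max_len:
            continue
        for s in services:
            # Signature check (types) plus semantic check (preconditions).
            if s.input_type == current_type and s.pre <= facts and s not in chain:
                new_facts = (facts - s.delete) | s.add
                queue.append((chain + (s,), s.output_type, new_facts))
    return solutions


class CompositionLearner:
    """Epsilon-greedy choice among reasonable compositions, driven by feedback.
    An assumed stand-in for the framework's learning module."""

    def __init__(self, epsilon=0.1, seed=0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.totals = {}  # chain key -> summed feedback
        self.counts = {}  # chain key -> number of executions

    @staticmethod
    def key(chain):
        return tuple(s.name for s in chain)

    def mean(self, chain):
        k = self.key(chain)
        return self.totals[k] / self.counts[k] if k in self.counts else 0.0

    def select(self, solutions):
        untried = [c for c in solutions if self.key(c) not in self.counts]
        if untried:
            return untried[0]  # try every reasonable solution once
        if self.rng.random() < self.epsilon:
            return self.rng.choice(solutions)  # explore
        return max(solutions, key=self.mean)  # exploit best average so far

    def update(self, chain, feedback):
        k = self.key(chain)
        self.totals[k] = self.totals.get(k, 0.0) + feedback
        self.counts[k] = self.counts.get(k, 0) + 1


# Hypothetical services and facts, invented for illustration.
services = [
    Service("to_grayscale", "RGBImage", "GrayImage", pre=frozenset({"color"}),
            add=frozenset({"monochrome"}), delete=frozenset({"color"})),
    Service("sepia_tone", "RGBImage", "RGBImage", pre=frozenset({"color"}),
            add=frozenset({"warm_tone", "aged_look"})),
    Service("add_vignette", "RGBImage", "RGBImage", add=frozenset({"vignette"})),
    Service("add_film_grain", "RGBImage", "RGBImage", add=frozenset({"aged_look"})),
]
goal = frozenset({"aged_look", "vignette"})
solutions = compose(services, "RGBImage", {"color"}, goal)


def hypothetical_feedback(chain):
    # Invented rating standing in for user or quality feedback.
    return 1.0 if CompositionLearner.key(chain) == ("sepia_tone", "add_vignette") else 0.5


learner = CompositionLearner(epsilon=0.1, seed=42)
for _ in range(50):
    chosen = learner.select(solutions)
    learner.update(chosen, hypothetical_feedback(chosen))

best = max(solutions, key=learner.mean)
print(len(solutions))          # 6
print([s.name for s in best])  # ['sepia_tone', 'add_vignette']
```

Without the learner, `compose` alone returns six reasonable chains of equal standing; a chain such as `to_grayscale` on its own passes the signature check but is pruned because it never establishes the goal facts. The learner then concentrates further executions on the chain with the highest average feedback.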

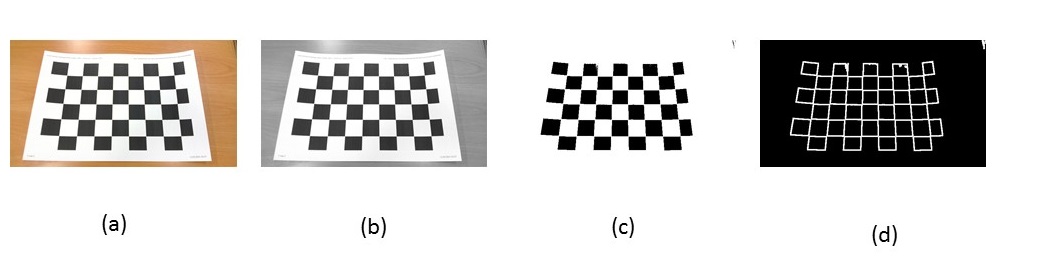

Example from the expert/technical domain: gradually changing an image to prepare its content for subsequent information extraction.